Abstract

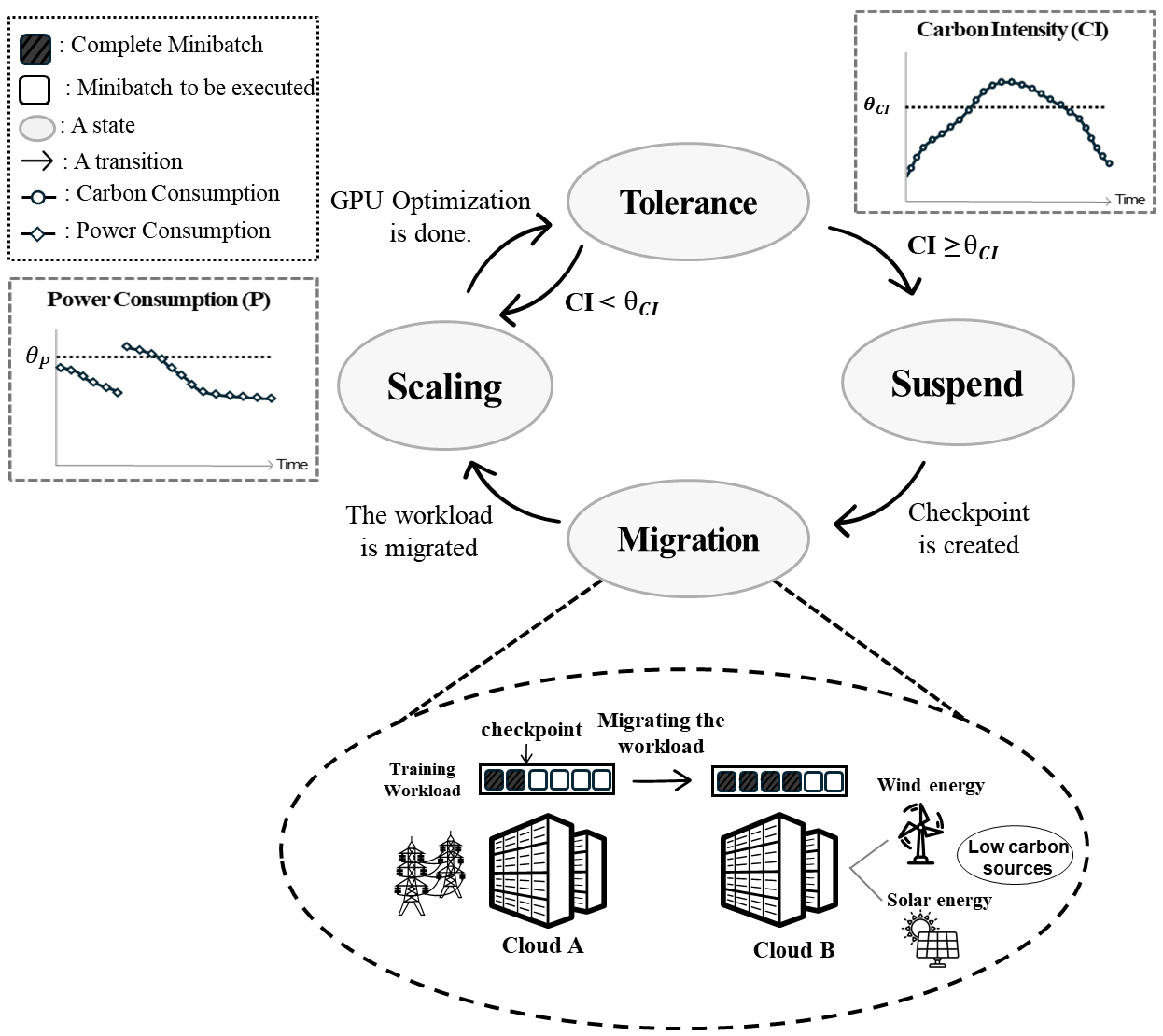

Recently, many deep learning models have been trained in geographically distributed data centers. The carbon emissions produced by training these models may contribute significantly to climate change, for example through rising temperatures. Existing studies struggle to shift the training workload to a data center with low carbon emissions. As a result, they fail to keep the workload's emissions low during training, especially for long-running workloads such as Large Language Models (LLMs). To address this problem, we propose a method that shifts the workload to a cloud with low carbon emissions while tolerating a shortage of computational resources. Specifically, we define a task scheduler whose states and transitions govern the dynamic migration of mini-batches. Next, we present a fault-tolerant control that optimizes the GPU frequency to adapt to variations in the training workload while providing guarantees on power consumption. Finally, we conduct extensive experiments on real-world data, comparing our method with state-of-the-art methods in terms of carbon emissions, transfer time, and power consumption.

Highlights